Overview

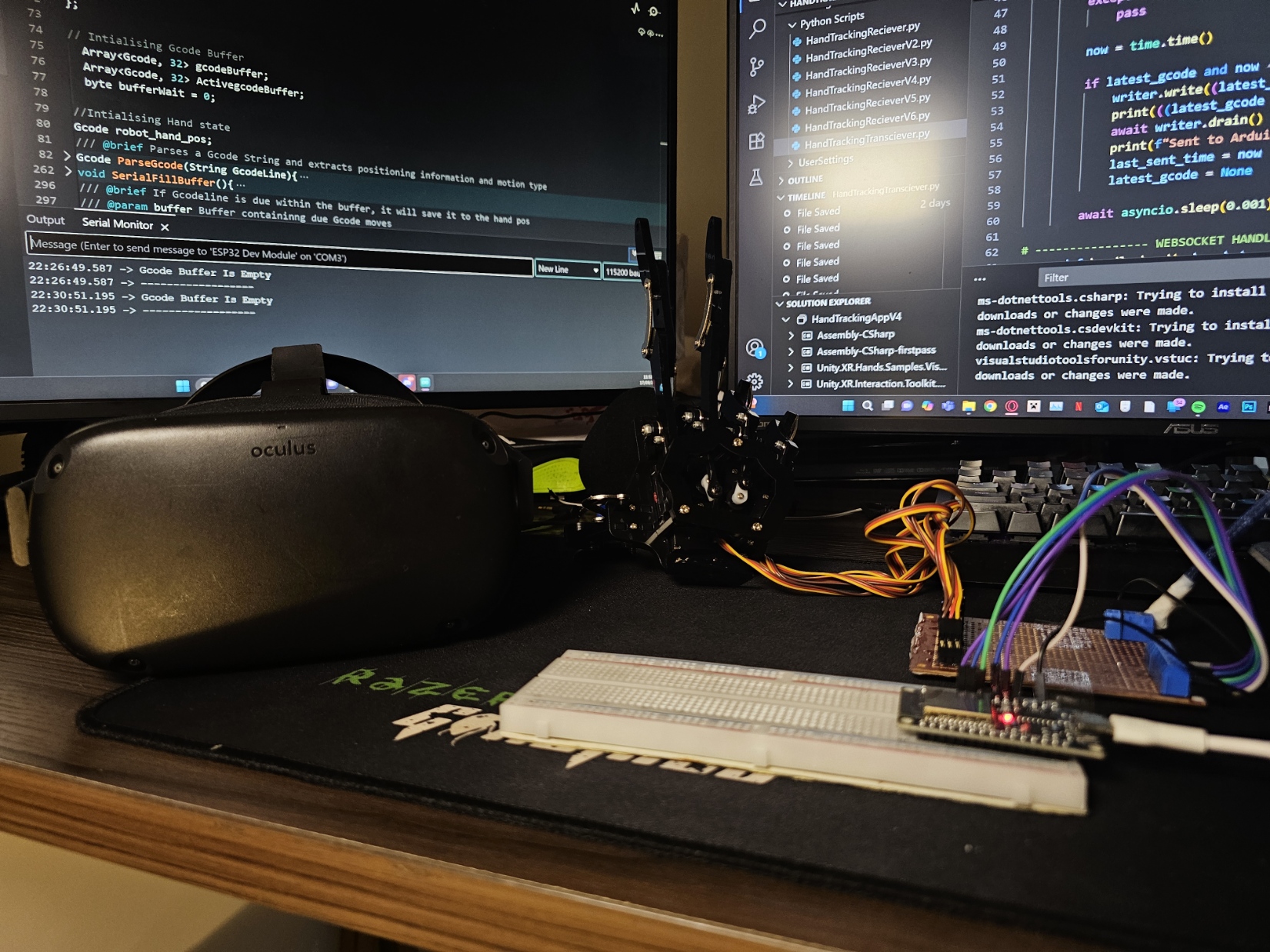

A robot hand that mimics your own hand motion using the original Meta Quest's hand tracking.

I built this system as a proof of concept for myself and others. I'm very interested in robotic limbs, and I wanted to practice controlling such systems.

The project started after I received a robot hand for my birthday. I wanted to demonstrate that a project like this was easily feasible.

Over 2–3 days, I developed the system.

While it works, there is still room for improvement, especially in the joint calculation algorithm/script. However, for now, this serves as a proof of concept, which I am satisfied with.

Demo Showcase

Features

- Real-time mimicry of hand motions on a robotic hand

- Wireless data transfer from Meta Quest to PC using WebSockets

- Servo control via ESP32 for precise finger movements

- Custom G-code parsing for easy integration with other systems

Components & Linked Tech Stack

- Unity App (Hand Tracking & Data Processing)

  - Tech Stack: Unity, C#, Meta Quest SDK, WebSockets

  - Function: Collects joint information from the Meta Quest, calculates joint angles, and sends data over LAN.

- Python Script (Data Receiver & G-code Generator)

  - Tech Stack: Python, Asyncio, WebSockets

  - Function: Receives joint data from Unity, processes it, and generates G-code instructions for the robot hand.

- ESP32 (Servo Controller)

  - Tech Stack: C++ / Arduino, ESP32 Servo and Array libraries

  - Function: Parses G-code commands and controls the finger servos in real-time.

Challenges & Learnings

The workflow was split into two main stages and one minor stage:

- Joint Control

- Joint Data

- Network Communication (minor stage)

Joint Control

The robot hand consisted of five servos, one for each finger. Controlling all five servos simultaneously without delay on a regular Arduino UNO was difficult due to the way the standard servo library works and the UNO's hardware limitations.

Switching to an ESP32 allowed me to assign a timer to each servo individually, which sorted out most of the issues I had.

With a simple range loop now working on all fingers at the same time, the next step was to control the position of each finger individually via commands.

For this, I borrowed a G-code communication protocol I had made for a separate project and integrated it into the robot hand's firmware. I just had to add special keywords such as "finger" and "index" in place of axes like "X" or "A". This works because it is a custom system that won't be dealing with standard CNC software.
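To illustrate the idea of keyword-based G-code words, here is a minimal Python sketch of a parser. The actual firmware runs as C++ on the ESP32 and its exact command syntax is not shown in this write-up, so the line format (`G1 index 90 thumb 45`), the keyword set in `FINGERS`, and the function name `parse_line` are all assumptions made for illustration.

```python
import re

# Hypothetical keyword set; the real firmware's keywords may differ.
FINGERS = {"thumb", "index", "middle", "ring", "pinky"}

# A "word" is a run of letters followed by a number, e.g. "G1" or "index 90".
WORD_RE = re.compile(r"([A-Za-z]+)\s*(-?\d+(?:\.\d+)?)")


def parse_line(line):
    """Parse one command line into (command, {finger: angle}).

    Returns (None, {}) for blank or comment-only lines.
    """
    line = line.split(";", 1)[0].strip()  # drop a trailing ';' comment
    words = [(key.lower(), float(val)) for key, val in WORD_RE.findall(line)]
    if not words:
        return None, {}

    # The first word is the command itself, e.g. ("g", 1.0) -> "G1".
    cmd_letter, cmd_number = words[0]
    command = f"{cmd_letter.upper()}{int(cmd_number)}"

    # Remaining words use finger names where CNC G-code would use axes.
    targets = {}
    for key, value in words[1:]:
        if key not in FINGERS:
            raise ValueError(f"unknown keyword: {key!r}")
        targets[key] = value
    return command, targets


if __name__ == "__main__":
    print(parse_line("G1 index 90 thumb 45.5 ; curl two fingers"))
```

Because the keywords are whole words rather than single axis letters, the parser matches a run of letters followed by a number and rejects anything it does not recognise.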

Joint Data

This stage was by far the most challenging. I decided to use the original Meta Quest to track hand motion and, thinking it wouldn't be difficult, jumped straight into Unity. My last experience with Unity was around 2019, but that wasn't a problem, since I wasn't going to be doing any real game development, merely interacting with Meta's APIs to get the headset's hand-tracking data.

Getting the SDK to interact with Meta's API was the real hassle. ChatGPT did not offer accurate, consistent, or coherent information on the SDK. Searching the internet was more promising, but still not easy, since the information was either too outdated or too new. Still, this was within my expectations, since the original Meta Quest had been discontinued and deprecated for years.

Eventually, using the oldest version of Meta's all-in-one SDK, I managed to get the hand tracking to work.

I then asked ChatGPT to write the C# scripts that use Meta's API to collect joint position information, calculate the joint angles, find the mean flexion, and send that over.
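The math behind that step can be sketched in a few lines. The example below is written in Python rather than the project's C#, and it is not the script used here: it assumes each finger is given as an ordered list of 3D joint positions, treats the bend at a joint as the angle between the two neighbouring bone vectors, and averages those bends. The names `angle_between` and `mean_flexion` are hypothetical.

```python
import math


def sub(a, b):
    """Component-wise difference a - b of two 3D points."""
    return tuple(x - y for x, y in zip(a, b))


def angle_between(u, v):
    """Angle in degrees between vectors u and v (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(x * x for x in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos_theta = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cos_theta))


def mean_flexion(joints):
    """Mean bend angle along one finger.

    joints: positions ordered from knuckle to fingertip (at least 3 points).
    A straight finger gives 0 degrees; a tighter curl gives a larger value.
    """
    if len(joints) < 3:
        raise ValueError("need at least 3 joint positions")
    bones = [sub(b, a) for a, b in zip(joints, joints[1:])]
    angles = [angle_between(u, v) for u, v in zip(bones, bones[1:])]
    return sum(angles) / len(angles)


if __name__ == "__main__":
    straight = [(0, 0, 0), (1, 0, 0), (2, 0, 0), (3, 0, 0)]
    curled = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0)]
    print(mean_flexion(straight), mean_flexion(curled))
```

Clamping the cosine before `acos` is the kind of small detail that is easy to get wrong in 3D vector code: without it, nearly parallel bones can produce a domain error.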

Unfortunately, ChatGPT couldn't provide reliable code (this is common when dealing with 3D vector code), so I had to review it and tell ChatGPT where its mistakes were until it produced an acceptable version.

If I ever seriously need to make the joint calculation more reliable, I will write the C# script myself. I will note, though, that even with a more reliable joint calculation, the original Meta Quest's tracking would still have been the main bottleneck.

Network Communication

This minor stage was simpler. The Python script received joint data, processed it, and sent it to the ESP32. On the Unity side, a C# script handled sending the data via LAN. Parameters were added to map joint data to servo ranges accurately.
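As a rough illustration of mapping joint data to servo ranges, the Python sketch below linearly rescales a flexion angle into a servo angle, clamps it, and formats a command line. The calibration values in `CALIBRATION`, the `G1 <finger> <angle>` output format, and the function names are assumptions for this example, not the project's actual parameters.

```python
def map_range(value, in_min, in_max, out_min, out_max):
    """Linearly map value from [in_min, in_max] to [out_min, out_max], clamped."""
    if in_max == in_min:
        raise ValueError("input range must not be empty")
    t = (value - in_min) / (in_max - in_min)
    t = max(0.0, min(1.0, t))  # keep the servo inside its allowed range
    return out_min + t * (out_max - out_min)


# Hypothetical per-finger calibration:
# (flexion_min_deg, flexion_max_deg, servo_min_deg, servo_max_deg)
CALIBRATION = {
    "thumb": (0.0, 60.0, 0, 180),
    "index": (0.0, 90.0, 0, 180),
}


def build_command(flexions):
    """Turn {finger: flexion_deg} into one command line for the servo controller."""
    parts = ["G1"]
    for finger, flexion in flexions.items():
        in_min, in_max, out_min, out_max = CALIBRATION[finger]
        angle = round(map_range(flexion, in_min, in_max, out_min, out_max))
        parts.append(f"{finger} {angle}")
    return " ".join(parts)


if __name__ == "__main__":
    print(build_command({"index": 45.0, "thumb": 90.0}))
```

Clamping matters here because tracked flexion can overshoot the calibrated range, and an out-of-range target could drive a servo against its mechanical stop.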

What I learned

- Unity workflow and integration with external APIs.

- How the Arduino servo library handles multiple servos.

- Network communication using WebSockets and TCP.

Some advice for you, the reader!

AI tools can speed up workflow and provide useful guidance, but they are not perfect. Always:

- Understand the code AI generates.

- Verify logic and math calculations manually.

- Check official documentation for libraries and APIs.

Using AI can help you work faster, but the final implementation requires careful review. Don't let it make the final product sloppy.